Override the TFS Team Build OutDir property in TFS 2013

I’ve blogged twice before about the OutDir MSBuild property set by Team Build. I’ve recently discovered that, with the default build process templates included with Team Foundation Server 2013, passing OutDir can be disabled via a simple Team Build process parameter.

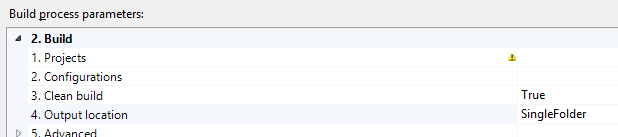

The parameter I am referring to is the “Output location”:

This parameter’s default value, “SingleFolder”, gives the traditional Team Build behaviour – the OutDir property will be specified on the MSBuild command-line and, unless you’ve made other changes, all build outputs will be dropped into this single folder.

Another value this parameter accepts is “PerProject” but this name can be slightly misleading. The OutDir property will still be specified on the MSBuild command-line but Team Build will append a subfolder for each project that has been specified in the Build Definition. That is, you may choose to build SolutionA.sln and SolutionB.sln from a single Build Definition and the “PerProject” option will split these into “SolutionA” and “SolutionB” subfolders. It will not output to different subfolders for the projects contained within each solution – for this behaviour you should specify the GenerateProjectSpecificOutputFolder property as an MSBuild argument as I’ve blogged previously.
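To make the difference between the two values described so far concrete, here is a minimal Python sketch of the folder that ends up in OutDir. It is only an illustration of the behaviour described above, not Team Build's actual implementation: the function name `outdir_for` and its parameters are hypothetical, and details such as trailing slashes are not modelled.

```python
from pathlib import PureWindowsPath


def outdir_for(output_location, binaries_dir, build_item):
    """Model the OutDir passed to MSBuild for one item in the Build Definition.

    Hypothetical helper for illustration only; it mirrors the prose description,
    not the real workflow activity.
    """
    binaries = PureWindowsPath(binaries_dir)
    if output_location == "SingleFolder":
        # Every solution and project shares the same output folder.
        return str(binaries)
    if output_location == "PerProject":
        # One subfolder per item listed in the Build Definition (e.g. per .sln),
        # not per project inside that solution.
        return str(binaries / PureWindowsPath(build_item).stem)
    raise ValueError(f"value not covered by this sketch: {output_location}")
```

Under this model, SolutionA.sln and SolutionB.sln share one folder with “SingleFolder”, and get “SolutionA” and “SolutionB” subfolders with “PerProject”; the projects inside each solution still land together.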

The value of the “Output location” that you’ve probably been looking for is “AsConfigured”. With this setting, Team Build will not pass the OutDir property to MSBuild at all and your projects will all build to their usual locations, just like they do in Visual Studio – presumably to a \bin\ folder under each project. With this setting, it is then your responsibility to configure a post-build target or script to copy the required files from their default build locations to the Team Build binaries share. For this purpose, Team Build provides a “TF_BUILD_BINARIESDIRECTORY” environment variable specifying the destination path to use. There are also some other environment variables populated by Team Build 2013 documented here.
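As a rough idea of what such a post-build script might look like, here is a naive Python sketch that copies each project's output folder into the binaries directory. Only the TF_BUILD_BINARIESDIRECTORY variable comes from Team Build; the function name, the `<source root>/<Project>/bin/<Configuration>` layout, and the one-subfolder-per-project destination are all assumptions made for illustration, and it requires Python 3.8 or later.

```python
import os
import shutil
from pathlib import Path


def copy_outputs_to_binaries(source_root, binaries_dir=None, configuration="Release"):
    """Copy every <Project>/bin/<configuration> folder into the binaries directory.

    Hypothetical helper: the folder layout and destination naming are assumptions.
    """
    # Team Build 2013 supplies the destination through this environment variable.
    binaries_dir = Path(binaries_dir or os.environ["TF_BUILD_BINARIESDIRECTORY"])
    copied = []
    for output_dir in sorted(Path(source_root).glob(f"*/bin/{configuration}")):
        if not output_dir.is_dir():
            continue
        # <Project>/bin/<configuration> -> two levels up is the project folder.
        project_name = output_dir.parent.parent.name
        destination = binaries_dir / project_name
        # dirs_exist_ok lets the script be re-run against a populated folder.
        shutil.copytree(output_dir, destination, dirs_exist_ok=True)
        copied.append(destination)
    return copied
```

Calling `copy_outputs_to_binaries(sources_folder)` from a post-build step would then populate the binaries directory with one subfolder per project. Note that this copies everything under each output folder without any filtering.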

At the end of the build process, Team Build will then copy the contents of the TF_BUILD_BINARIESDIRECTORY either to the UNC path drop folder or to storage within the TFS Collection database itself, according to the Staging Location setting you’ve chosen on the Build Defaults page.

However, before you rush away to use this new capability, consider that MSBuild, or more accurately the set of Microsoft.*.targets files used by almost all projects, already contains a great deal of logic for deciding which files to copy to the build drop. For example, Web Application projects will copy the contents of the \bin\ folder and all the other content files (e.g. CSS, JavaScript, and images) whilst excluding C# code files and the project file. Instead of re-implementing this behaviour yourself, leverage what MSBuild already provides and use the existing hook points to adjust it when you need to alter it for your situation.

If you’re interested, you’ll find that the “Output location” behaviour is implemented in the new RunMSBuild workflow activity, specifically within its RunMSBuildInternal private nested activity.