Category: PowerShell

Upgrade Visual Studio Scrum 1.0 Team Projects to version 2.0 on TFS 2012

Team Foundation Server 2010 shipped with two default Process Templates, one for Agile and another for CMMI, but Microsoft also provided a third template for Scrum teams as a separate download. With the recent release of Team Foundation Server 2012, the latest version of this additional template (Microsoft Visual Studio Scrum 2.0) is not only included in-the-box but is also now the default template for new Team Projects.

If you move from TFS 2010 to TFS 2012 and upgrade your existing Team Projects in the process, your existing Microsoft Visual Studio Scrum 1.0 Team Projects will stay as version 1.0. The new, much improved Web Access in TFS 2012 gives you the option to modify the process of an existing project slightly to enable some new TFS 2012 features, but your projects will still be mostly version 1.0. A feature-enabled Scrum 1.0 Team Project will still differ from a new Microsoft Visual Studio Scrum 2.0 Team Project in these aspects (of varying impact):

- Using the Microsoft.VSTS.Common.DescriptionHtml field for HTML descriptions on work items instead of the new HTML-enabled System.Description field.

- Missing ClosedDate field on Tasks, Bugs and Product Backlog Items.

- Missing extra reasons on Bug transitions.

- Sprint start and finish dates still in Sprint work items, instead of on Iterations.

- Queries still based on work item type instead of work item category.

- Old Sprint and Product Backlog queries.

- Missing the new “Feedback” work item query.

- Missing extra browsers and operating systems in Test Management configuration values.

- Old reports.

While these differences may be subtle now, they could become critical when adopting third-party tooling designed to work only with the latest TFS process templates, or when upgrading to the next release of TFS and trying to make the most of its features. For me, though, keeping existing projects consistent with the new Team Projects I create is probably the most important factor. As such, I’ve scripted most of the process for applying these changes to existing projects, as they can be rather tedious to apply by hand, especially when you have many Team Projects.

The script and related files are available on GitHub.

To use the script, open a PowerShell v3 session on a machine with Team Explorer 2012 installed. The user account should be a Collection Administrator. The upgrade process may run faster if executed on the TFS Application Tier, in which case the PowerShell session should also be elevated. Ensure your PowerShell execution policy allows scripts to run. Run the following command:

<path>\UpgradeScrum.ps1 -CollectionUri http://tfsserver:8080/tfs/SomeCollection -ProjectName SomeProject

Swap the placeholder values to suit your environment. The ProjectName parameter can be omitted to process all Team Projects or you can specify a value containing wildcards (using * or ?).
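The post doesn’t show how UpgradeScrum.ps1 matches the ProjectName value. PowerShell’s own `-like` operator uses the same `*` and `?` wildcards, so the filtering might be sketched as follows. The project names and the Select-ProjectName function are hypothetical; the real script would get the names from TFS.

```powershell
# Hypothetical Team Project names; the real script would query the collection for these.
$allProjects = 'WebShop', 'WebApi', 'Web2', 'Billing'

function Select-ProjectName {
    param(
        [string[]]$Name,
        # Omitting the pattern selects every project, mirroring an omitted -ProjectName.
        [string]$Pattern = '*'
    )
    # -like treats * as "any run of characters" and ? as "exactly one character".
    $Name | Where-Object { $_ -like $Pattern }
}

Select-ProjectName -Name $allProjects -Pattern 'Web*'   # WebShop, WebApi, Web2
Select-ProjectName -Name $allProjects -Pattern 'Web?'   # Web2 only
```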

Aside from fixing most of the differences listed above, the script will copy Sprint dates to their corresponding Iterations and also copy the description HTML from the old field to the new System.Description field. The script will also map the default Team to the existing areas and iterations. The Sprint work item type will remain in case you have saved important Retrospective notes, as the new TFS 2012 template doesn’t have a corresponding field for this information.

One step my upgrade script doesn’t do yet is upload the new Reports but that can be achieved just as easily with the “addprojectreports” capability of the TFS Power Tools (the Beta version works with RTM).

Also, for anyone who used TFS 11 Beta or TFS 2012 RC and has created Team Projects based on the Preview 3 or Preview 4 versions of the Scrum 2.0 template, my script will also upgrade those projects to the RTM version of the template. I later plan to implement a similar script to upgrade existing Agile 5.0 Team Projects to the new Agile 6.0 process template.

Warning: If you have customized your work item type definitions from their original state (eg added extra fields), the upgrade may fail or data may even be lost. However, I have upgraded at least 10 Team Projects so far, all successfully. As the script is written in PowerShell, the implementation is easily accessible for verification or modification to suit your needs.

PowerShell Remoting, User Profiles, Delegation and DPAPI

I’ve been working with a PowerShell script to automatically deploy an application to an environment. The script is initiated on one machine and uses PowerShell Remoting to perform the install on one or more target machines. On the target machines the install process needs the username and password of the service account that the application will be configured to run as.

I despise handling passwords in my scripts and applications so I avoid it wherever possible, and where I can’t avoid it, I make sure I handle them securely. And this is where the fun starts.

By default, PowerShell Remoting suffers from the same multi-hop authentication problem as any other system using Windows security, i.e. the credentials used to connect to the target machine cannot be used to connect from the target machine to another resource requiring authentication. The most promising solution to this in PowerShell Remoting is CredSSP which enables credentials to be delegated but it has some challenges:

- It is not enabled by default; you need to execute Enable-WSManCredSSP or use Group Policy to configure the involved machines to support CredSSP.

- It is not available on Windows XP or Server 2003, a minor concern given that these OSes should be dead by now, but worth mentioning.

- PS Remoting requires CredSSP to be used with “fresh” credentials even though CredSSP as a technology supports delegating default credentials (this is how RDP uses CredSSP).

It is the last point about fresh credentials that kills CredSSP for me: I don’t want to persist another password for my non-interactive script to use when establishing a remoting connection. There is a bug on Microsoft Connect about this that you can vote up.

Instead I need to fall back to the pre-CredSSP (and poorly documented) way of supporting multi-hop authentication with PS Remoting: Delegation. You basically require at least two things to be configured correctly in Active Directory for this to work.

- The user account that is being used to authenticate the PS Remoting session must have its AD attribute “Account is sensitive and cannot be delegated” unchecked.

- The computer account of the machine PS Remoting is connecting to must have either the “Trust this computer for delegation to any service” option enabled, or the “Trust this computer for delegation to specified services only” option enabled with a list of which services on which machines can be passed delegated credentials. The latter is more secure if you know which services you’ll need.

At first I wasn’t sure which caches needed to expire, because it took a while for these changes to start working for me. It turned out that after the PS Remoting target computer refreshed its Kerberos ticket (every 10 hours by default), I could use PS Remoting with the default authentication method (Kerberos) and authenticate to resources beyond the connected machine. Rebooting the target computer, or using the “klist purge” command against the target computer’s system account, will force the Kerberos ticket to be refreshed.

With that hurdle overcome, the fun continues with the handling of the application’s service account credentials. PowerShell’s ConvertTo- and ConvertFrom-SecureString cmdlets enable me to encrypt the service account password using a Windows-managed encryption key specific to the current user; in my case this is the user performing the deployment and authenticating the PS Remoting session. As a one-time operation I ran an interactive PowerShell session as the deployment user, used `Read-Host -AsSecureString | ConvertFrom-SecureString` to encrypt the application service account password, and stored the result in a file alongside the deployment script.
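A minimal sketch of that one-time encryption step and the matching deployment-time decryption might look like this. The function names, the `ServiceAccount.pwd` file name and the `CONTOSO\svc-app` account are hypothetical. Both steps rely on the current user’s DPAPI key, because ConvertFrom-SecureString and ConvertTo-SecureString are called without `-Key`.

```powershell
# One-time step, run interactively as the deployment user. With no -Key,
# ConvertFrom-SecureString encrypts with DPAPI using the current user's key.
function Save-EncryptedPassword {
    param(
        [Parameter(Mandatory = $true)][System.Security.SecureString]$Password,
        [Parameter(Mandatory = $true)][string]$Path
    )
    $Password | ConvertFrom-SecureString | Set-Content -Path $Path
}

# Deployment-time step: decrypt with the same user's DPAPI key and build a credential.
function Get-ServiceAccountCredential {
    param(
        [Parameter(Mandatory = $true)][string]$UserName,
        [Parameter(Mandatory = $true)][string]$Path
    )
    $secure = Get-Content -Path $Path | ConvertTo-SecureString
    New-Object System.Management.Automation.PSCredential($UserName, $secure)
}

# Interactive usage (hypothetical names):
# Save-EncryptedPassword -Password (Read-Host -AsSecureString) -Path .\ServiceAccount.pwd
# $cred = Get-ServiceAccountCredential -UserName 'CONTOSO\svc-app' -Path .\ServiceAccount.pwd
```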

At deployment time, the script uses ConvertTo-SecureString to retrieve the password from the encrypted file and configure the application’s service account. At least, that worked in my interactive proof-of-concept testing. In the real deployment, however, it failed to decrypt the password with the error message “Key not valid for use in specified state”. After quite some digging I found the culprit.

The Convert*-SecureString cmdlets are using the DPAPI and the current user’s key when a key isn’t specified explicitly. DPAPI is dependent on the current user’s Windows profile being loaded to obtain the key. When using Enter-PSSession to do my interactive testing, the user profile is loaded for me but when using Invoke-Command inside my deployment script, the user profile is not loaded by default so DPAPI can’t access the key to decrypt the password. Apparently this is a known issue with impersonation and the DPAPI. It’s also worth noting that when using the DPAPI with a user key for a domain user account with a roaming profile, I found it needs to authenticate to the domain controller, a nice surprise for someone trying to configure delegation to specific services only.

My options now appear to be one of:

1. Use the OpenProcessToken and LoadUserProfile win32 API functions to load the user profile before making DPAPI calls.

2. Ignore the Convert*-SecureString cmdlets and call the DPAPI via the .NET ProtectedData class so I can use the local machine key for encryption instead of the current user’s key.

3. Decrypt the password outside the PS Remoting session and pass the unencrypted password into the Remoting session ready to be used. I don’t like the security implications of this.

I’ll likely go with option (2) to get something working as soon as possible and look into safely implementing option (1) when I have more time.
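Option (2), calling ProtectedData with the local machine key, could be sketched as below. This assumes Windows PowerShell, where ProtectedData lives in the System.Security assembly, and the function names are hypothetical. Keep two caveats in mind. First, any process on that machine can decrypt a LocalMachine-protected secret. Second, the key never leaves the machine, so the ciphertext has to be produced on each target machine (for example in a one-time remoting session) rather than once alongside the deployment script.

```powershell
# Windows PowerShell (.NET Framework) keeps ProtectedData in the System.Security assembly.
if (-not ('System.Security.Cryptography.ProtectedData' -as [type])) {
    Add-Type -AssemblyName System.Security
}

# Encrypt a SecureString with the local machine's DPAPI key; returns Base64 text for storage.
function Protect-MachinePassword {
    param([Parameter(Mandatory = $true)][System.Security.SecureString]$Password)
    # Briefly expose the plain text so it can be encrypted, then zero the unmanaged copy.
    $bstr = [System.Runtime.InteropServices.Marshal]::SecureStringToBSTR($Password)
    try {
        $plain = [System.Runtime.InteropServices.Marshal]::PtrToStringBSTR($bstr)
    } finally {
        [System.Runtime.InteropServices.Marshal]::ZeroFreeBSTR($bstr)
    }
    $bytes = [System.Text.Encoding]::UTF8.GetBytes($plain)
    $cipher = [System.Security.Cryptography.ProtectedData]::Protect(
        $bytes, $null, [System.Security.Cryptography.DataProtectionScope]::LocalMachine)
    [Convert]::ToBase64String($cipher)
}

# Decrypt Base64 text produced on this machine and return it as a SecureString.
function Unprotect-MachinePassword {
    param([Parameter(Mandatory = $true)][string]$CipherText)
    $cipher = [Convert]::FromBase64String($CipherText)
    $bytes = [System.Security.Cryptography.ProtectedData]::Unprotect(
        $cipher, $null, [System.Security.Cryptography.DataProtectionScope]::LocalMachine)
    ConvertTo-SecureString -String ([System.Text.Encoding]::UTF8.GetString($bytes)) -AsPlainText -Force
}
```

Unlike the user-key variant, this does not depend on a loaded user profile, which is why it sidesteps the Invoke-Command problem.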

Adopting PsGet as my preferred PowerShell module manager

I recently blogged about what features I think a PowerShell module manager should have, and I briefly mentioned a few existing solutions and my current thoughts about them. A few people left comments mentioning some other options (which I’ve now looked into). I finished the post suggesting that it would be better for me to contribute to one of the existing solutions than to introduce my own new alternative to the mix. So I did.

I chose Mike Chaliy’s PsGet as my preferred solution (not to be confused with Andrew Nurse’s PS-Get) because I liked its current feature set, implementation, and design goals most. I have been committing to PsGet regularly, implementing the missing features I blogged about previously and anything else I’ve discovered as part of using it for my day job. My favourite contributions that I have made to PsGet so far include:

- Support for non-default values of the PSModulePath environment variable and for installing modules (and PsGet itself) to any path, ignoring the value of PSModulePath.

- Allow a hash (SHA256) of the module to be passed to Install-Module to ensure only trusted modules get installed, as an alternative to Execution Policy and files marked with the Internet Zone.

- Support for modules hosted on the public NuGet repository.
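The hash check in the second item can be sketched in a few lines. This is not PsGet’s code, and the function names are hypothetical. It uses the .NET SHA256 class directly because Get-FileHash only arrived in PowerShell 4.0.

```powershell
# Compute a file's SHA256 hash as an upper-case hex string.
function Get-Sha256Hex {
    param([Parameter(Mandatory = $true)][string]$Path)
    $sha = [System.Security.Cryptography.SHA256]::Create()
    $stream = [System.IO.File]::OpenRead((Resolve-Path -LiteralPath $Path).ProviderPath)
    try {
        $hash = $sha.ComputeHash($stream)
    } finally {
        $stream.Dispose()
        $sha.Clear()   # Clear() releases the algorithm on every .NET version
    }
    ($hash | ForEach-Object { $_.ToString('X2') }) -join ''
}

# Refuse to continue unless the downloaded file matches the hash the caller trusts.
function Assert-FileHash {
    param(
        [Parameter(Mandatory = $true)][string]$Path,
        [Parameter(Mandatory = $true)][string]$ExpectedSha256
    )
    $actual = Get-Sha256Hex -Path $Path
    # -ne is case-insensitive, so upper- or lower-case expected hashes both match.
    if ($actual -ne $ExpectedSha256) {
        throw "SHA256 mismatch for '$Path': expected $ExpectedSha256, got $actual."
    }
}
```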

Since I adopted PsGet, Ethan Brown also contributed some important changes to support modules hosted on CodePlex, the PowerShell Community Extensions being a popular example. I’ve also been experimenting with integrating PsGet with the same web service that Microsoft’s new Script Explorer uses to download the wide selection of scripts and modules available from the TechNet Script Repository. PsGet also handles the scripts hosted on PoshCode well but I think we can improve the search capability.

Looking back at the list of requirements I made for a PowerShell module manager, this is what I think remains for PsGet, in order of most important first:

- Side-by-side versioning. While already achievable by overriding the module install destination, getting the concept of a version into PsGet is important, and will improve NuGet integration too.

- There is still a lot of work to be done to enable authors to more easily publish their modules. I’ve found publishing my own modules on GitHub and letting PsGet use the zipballs works well, but it doesn’t handle modules that require compilation (eg implemented with C#).

- I still want to add opt-in support for marking downloaded modules as originating from the Internet Zone for those who want to use the features of Execution Policy. I believe the intent of Execution Policy is misunderstood by many and it serves a useful purpose. I might blog about this one day. This is quite a low priority feature though, especially as I recently discovered PSv2 ignores the zone on binary files.

I hope you’ll take a look at PsGet for managing installation of your PowerShell modules and provide your feedback to Mike, me and other contributors via the PsGet Issues list on GitHub.

Beware of unqualified PowerShell type literals

In PowerShell we can refer to a type using a type literal, eg:

[System.DateTime]

Type literals are used when casting one type to another, eg:

[System.DateTime]'2012-04-11'

Or when accessing a static member, eg:

[System.DateTime]::UtcNow

Or declaring a parameter type in a function, eg:

function Add-OneWeek ([System.DateTime]$StartDate) {

$StartDate.AddDays(7)

}

PowerShell also provides a handful of type accelerators so we don’t have to use the full name of the type, eg:

[datetime] # accelerator for [System.DateTime]

[wmi] # accelerator for [System.Management.ManagementObject]

However, unlike a C# project in Visual Studio, PowerShell will let you load two identically named types from two different assemblies into the session:

Add-Type -AssemblyName 'Microsoft.TeamFoundation.Client, Version=10.0.0.0, Culture=neutral, PublicKeyToken=b03f5f7f11d50a3a'

Add-Type -AssemblyName 'Microsoft.TeamFoundation.Client, Version=11.0.0.0, Culture=neutral, PublicKeyToken=b03f5f7f11d50a3a'

Both of the assemblies in my example contain a class named TfsConnection, so which version of the type is referenced by this type literal?

[Microsoft.TeamFoundation.Client.TfsConnection]

From my testing it appears to resolve to the type found in whichever assembly was loaded first; in my example above it would be version 10. However, a single script or module would be unlikely to load both versions of the assembly itself, so you would more likely encounter this situation when two different scripts or modules load conflicting versions of the same assembly. In that case you don’t control the order in which each assembly is loaded, so you can’t be sure which is first.

It is possible for a script to detect if a conflicting assembly version is loaded if it is expecting this scenario but the CLR won’t allow an assembly to be unloaded so all the script could do is inform the user and abort, or maybe spawn a child PowerShell process in which to execute.
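A minimal sketch of that detect-and-abort approach follows. It assumes the script knows which assembly name and version it expects, and the function names are hypothetical.

```powershell
# Return the version of every loaded assembly with the given simple name.
function Get-LoadedAssemblyVersion {
    param([Parameter(Mandatory = $true)][string]$Name)
    [System.AppDomain]::CurrentDomain.GetAssemblies() |
        Where-Object { $_.GetName().Name -eq $Name } |
        ForEach-Object { $_.GetName().Version }
}

# Throw a descriptive error if a different version of the assembly is already loaded.
# The CLR cannot unload it, so the caller's only options are to abort or start a new process.
function Assert-NoConflictingAssembly {
    param(
        [Parameter(Mandatory = $true)][string]$Name,
        [Parameter(Mandatory = $true)][version]$Version
    )
    $conflicts = @(Get-LoadedAssemblyVersion -Name $Name | Where-Object { $_ -ne $Version })
    if ($conflicts.Count -gt 0) {
        throw "$Name version(s) $($conflicts -join ', ') already loaded; expected $Version. Start a new PowerShell session."
    }
}

# Example: Assert-NoConflictingAssembly -Name 'Microsoft.TeamFoundation.Client' -Version '11.0.0.0'
```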

It is also possible to have two identically named types loaded in PowerShell via another less obvious scenario. If you use the New-WebServiceProxy cmdlet against two different endpoints implementing the same web service interface, PowerShell generates and loads two different dynamic assemblies with identical type names (assuming you specify the same Namespace parameter to the cmdlet). I’ve run into this issue with the SQL Server Reporting Services web service.

To address this issue, referring to my first example, you can use assembly-qualified type literals, eg:

[Microsoft.TeamFoundation.Client.TfsConnection, Microsoft.TeamFoundation.Client, Version=11.0.0.0, Culture=neutral, PublicKeyToken=b03f5f7f11d50a3a]

However, these quickly make scripts harder to read and maintain. For accessing static members you can assign the type literal to a variable, eg:

$TfsConnection11 = [Microsoft.TeamFoundation.Client.TfsConnection, Microsoft.TeamFoundation.Client, Version=11.0.0.0, Culture=neutral, PublicKeyToken=b03f5f7f11d50a3a]

$TfsConnection11::ApplicationName
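To write an assembly-qualified literal you need the exact string, and any resolved type’s AssemblyQualifiedName property will give it to you. The same string can also be resolved at run time with [System.Type]::GetType and held in a variable, as with $TfsConnection11. In the sketch below, System.DateTime stands in for the TFS type because it is always loaded. $DateTimeType is a hypothetical name.

```powershell
# Print the string to paste into an assembly-qualified type literal. For the TFS case you
# would run this once in a session where the desired assembly version was loaded.
[System.DateTime].AssemblyQualifiedName

# Resolve the type from that string at run time (throwing if it cannot be found),
# keep it in a variable, and reach static members through the variable.
$aqn = [System.DateTime].AssemblyQualifiedName
$DateTimeType = [System.Type]::GetType($aqn, $true)
$DateTimeType::MinValue.Year
```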

But for casting and declaring parameter types I don’t have a better solution. There are ways to add to PowerShell’s built-in type accelerator list, but they involve manipulating non-public types, which I wouldn’t feel comfortable doing in a script or module I intend to publish for others to use.

For the New-WebServiceProxy situation, I have created a wrapper function which will reuse the existing PowerShell generated assembly if it exists.

Test Attachment Cleaner Least Privilege

I manage a Team Foundation Server 2010 instance with at least 30 Collections, each with several Team Projects. Even after installing the TFS hotfix to reduce the size of test data in the TFS databases, we still accrue many binary files courtesy of running Code Coverage analysis during our continuous integration builds. As such, it is still necessary to run the Test Attachment Cleaner Power Tool regularly to keep the database sizes manageable.

The Test Attachment Cleaner however is a command-line tool which requires the Collection Uri and the Team Project Name among several other parameters so I first needed to write a script (I chose PowerShell) to query TFS for all the Collections and Projects and call the Cleaner for each. I then needed to configure this script (and therefore the Cleaner) to run as a scheduled task and I needed to specify which user the task would run as.
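The shape of that driver script might be sketched as below. The TFS queries and the Cleaner’s command line are left as hypothetical script blocks (-GetCollection, -GetProject, -InvokeCleaner) rather than guessing at API calls or command-line switches. What the sketch shows is the loop over every project in every collection, where a failure in one project is recorded instead of aborting the whole scheduled run.

```powershell
function Invoke-AttachmentCleanup {
    param(
        # Hypothetical: returns the URI of every collection on the server.
        [Parameter(Mandatory = $true)][scriptblock]$GetCollection,
        # Hypothetical: given a collection URI, returns its Team Project names.
        [Parameter(Mandatory = $true)][scriptblock]$GetProject,
        # Hypothetical: runs the Cleaner for one project. It should throw on failure,
        # eg by checking $LASTEXITCODE after invoking the command-line tool.
        [Parameter(Mandatory = $true)][scriptblock]$InvokeCleaner
    )
    foreach ($collectionUri in (& $GetCollection)) {
        foreach ($projectName in (& $GetProject $collectionUri)) {
            $succeeded = $true
            $message = $null
            try {
                # Discard the Cleaner's own output so only result objects reach the pipeline.
                & $InvokeCleaner $collectionUri $projectName | Out-Null
            } catch {
                $succeeded = $false
                $message = $_.Exception.Message
            }
            New-Object PSObject -Property @{
                CollectionUri = $collectionUri
                ProjectName   = $projectName
                Succeeded     = $succeeded
                Error         = $message
            }
        }
    }
}
```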

The easiest answer would be to run the task as a user who has TFS Server Administrator privileges to ensure the Cleaner has access to find and delete attachments in every project in every collection but that would be overkill. I couldn’t find any existing documentation on the minimum privileges required by the Cleaner so I started with a user with zero TFS privileges and repeatedly executed the scheduled task, granting each permission mentioned in each successive error message until the task completed successfully.

For deleting attachments by extension and age I found the minimum permissions required were the following three, all at the Team Project level:

- View test runs

- Create test runs (non-intuitively, this permission allows attachments to be deleted)

- View project-level information

For deleting attachments based on linked bugs (something I didn’t try) I suspect the “View work items in this node” permission would also be required at the root Area level.

Having determined these permissions, I needed to apply them across all the Team Projects, but it appears the only out-of-the-box way to set these permissions is via the Team Explorer user interface, which becomes rather tedious after the first few projects. Instead I scripted the permission granting via the TFS API too, and I’ve published some PowerShell cmdlets to make this easier if anyone else needs to do the same.

Implementing PowerShell modules with PowerShell versus C#

I think PowerShell is a great and powerful language with very few limitations, and when implementing a Module for PowerShell my default language of choice has been PowerShell itself, for several reasons:

- Many Modules evolve from refactoring and reuse of existing PowerShell scripts so it makes sense to use the existing code instead of rewriting it in another language.

- The Advanced Functions capability introduced by PowerShell 2.0 enables first-class Cmdlets, and therefore Modules, to be built with PowerShell, whereas C# was required to achieve this in PSv1 days.

- PowerShell’s pipeline, native support for WMI, variety of drive providers, and loose type system makes it much quicker and cleaner to write certain kinds of code compared to C#.

- The feedback loop when developing and testing Modules written with PowerShell is much quicker when there is no compile step and you don’t have to worry about locking a Binary Module’s DLL file.

- Contributions such as bug fixes or new features from the wider PowerShell community are more likely if the Module is written with PowerShell as the source is immediately available and the users can use their own changes without additional steps.

However, as much as I believe in writing with PowerShell, for PowerShell wherever possible, I’ve been thinking that I may just have to consider using C# for developing PowerShell Modules more often because:

- Proxy functions are being promoted as a way for users to add features and fix “broken” behaviour in built-in Cmdlets. Modules developed with PowerShell will be affected by these changes and may introduce bugs unless every Cmdlet reference is fully qualified in the Module (eg Microsoft.PowerShell.Management\Get-ChildItem) which will lead to less maintainable code.

- Even with PSv2’s try{}catch{} structured error handling, I feel that the combination of terminating and non-terminating errors and ErrorActions can make writing resilient PowerShell scripts harder and less maintainable than the C# equivalent.

- Implementing common parameters across multiple Cmdlets is done through copy-and-paste with PowerShell instead of the cleaner inheritance approach possible in C#.

- Automated test frameworks for verifying module functionality are much more mature for C# than they are for PowerShell and even though a C# test framework could be used to test a Module implemented in PowerShell, I’m not sure this would be a pleasant development experience.

- Performance critical code can potentially be more efficient when implemented in C# instead of PowerShell (although we can embed chunks of C# directly in PowerShell scripts if necessary).
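Two of these concerns are easy to demonstrate in a few lines. The first is a same-named function shadowing a built-in Cmdlet; a plain function stands in for a real proxy function here. The second is a non-terminating error slipping past try/catch. The temporary path is randomly generated so that it does not exist.

```powershell
# 1. A same-named function (standing in for a user's proxy function) takes precedence over
#    the built-in Cmdlet, but a module-qualified name always reaches the original Cmdlet.
function Get-ChildItem { 'shadowed by a user function' }

$unqualified = Get-ChildItem
$qualified = Microsoft.PowerShell.Management\Get-ChildItem -Path $PSHOME

Remove-Item -Path Function:\Get-ChildItem    # restore normal command resolution

# 2. A non-terminating error does not reach catch under the default ErrorAction (Continue);
#    only -ErrorAction Stop (or $ErrorActionPreference = 'Stop') makes it terminating.
$missing = Join-Path ([System.IO.Path]::GetTempPath()) ([guid]::NewGuid().ToString())

$caughtWithContinue = $false
try { Get-Item -Path $missing -ErrorAction Continue 2>$null } catch { $caughtWithContinue = $true }

$caughtWithStop = $false
try { Get-Item -Path $missing -ErrorAction Stop } catch { $caughtWithStop = $true }

"Caught with Continue: $caughtWithContinue; caught with Stop: $caughtWithStop"
# Caught with Continue: False; caught with Stop: True
```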

Ultimately I don’t think a definitive answer can be given one way or the other; it will depend on the nature of the module being developed. For example, one Module I have written is a wrapper for an existing .NET API and doesn’t use many of the built-in PowerShell Cmdlets itself, so it works quite well developed with PowerShell. As an alternative example, the PowerShell Community Extensions Module has a lot of code interacting with native APIs via P/Invoke and a large suite of automated tests, which make it well suited to being developed with C#.

Requirements for a PowerShell module manager

I write a lot of PowerShell scripts. Many of these get transformed into modules for reuse and occasionally published for others. However making these reusable modules available wherever my scripts may run and propagating updates to these modules is not easily managed.

As a solution to this I’ve been designing a PowerShell module to enable scripts and modules to easily reference and use other modules without requiring these modules to be installed first. The idea is very similar to RubyGems or NuGet, but I feel there are some important criteria that need to be met by a PowerShell module manager.

Here is a list of requirements from the perspective of a module-consumer:

- PowerShell already defines standard install locations for modules so a module manager should make it easy to use these by default but still allow them to be overridden.

- Scripts will often run in a least privilege environment so a module manager should not require any special permissions to install a module.

- PowerShell’s Execution Policy depends on scripts downloaded from the Internet being marked as such, so a module manager should honour this by marking installed modules with the appropriate Zone Identifier.

- PowerShell allows multiple versions of a module to exist side-by-side and allows scripts to reference a specific version, a module manager must support this behaviour.

- A script should be able to use the module manager to install other modules even when the module manager itself hasn’t been installed yet (eg using a secure bootstrap).

- A script should be able to reference a module by name and optional version number only.

- The module manager should operate on a clean operating system with only PowerShell installed and not have any other pre-requisites.
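Marking a file with the Internet Zone, as the Execution Policy requirement above asks, comes down to writing a Zone.Identifier alternate data stream. The sketch below assumes PowerShell 3.0 or later on NTFS, since the -Stream parameter and Unblock-File were both added in 3.0. The function names are hypothetical.

```powershell
# Mark a file as downloaded from the Internet Zone (ZoneId=3) by writing the
# Zone.Identifier alternate data stream that browsers normally add.
function Set-InternetZoneIdentifier {
    param([Parameter(Mandatory = $true)][string]$Path)
    Set-Content -LiteralPath $Path -Stream 'Zone.Identifier' -Value "[ZoneTransfer]`r`nZoneId=3"
}

# Read the zone back, or $null if the file carries no zone information.
function Get-ZoneIdentifier {
    param([Parameter(Mandatory = $true)][string]$Path)
    $content = Get-Content -LiteralPath $Path -Stream 'Zone.Identifier' -ErrorAction SilentlyContinue
    foreach ($line in $content) {
        if ($line -match '^ZoneId=(\d+)$') { return [int]$Matches[1] }
    }
    $null
}
```

Unblock-File removes the same stream, which is how a user would opt out for a module they trust.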

As a module author, there is also a set of expectations from a module manager:

- A format for modules and their metadata is already defined by PowerShell itself (a PSD1 file with a collection of PSM1, PS1, and DLL files etc in a folder) so a module manager should leverage this instead of defining a new format. The format for transporting a module over the wire is an implementation concern of the module manager and not a concern of the module author.

- Publishing a new or updated module for others to consume should be a PowerShell one-line command that can consume any locally installed module.

- There should be a standard public repository for published modules but private repositories should also be supported for publishing modules intended for personal or enterprise use only.

There will inevitably be some impacts on how authors develop their modules to support the above requirements:

- To support the common PowerShell Execution Policy setting of RemoteSigned, published modules should be signed with a valid Code Signing Certificate issued by a recognised authority; these can cost as little as US$75.

- Modules must assume they will be executing in a least privilege environment and only operate with the minimum permissions required to perform the task the module is designed to do.

- Related to least privilege, modules should not require a “first-use” process to register or configure the module that would require elevation.

- Modules must not assume they will be installed to a specific location and instead should use the $PSScriptRoot variable for module-relative paths.
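The $PSScriptRoot point is easy to check with a throwaway module created in a temporary folder. HypotheticalModule and Get-ModuleDataPath are made-up names.

```powershell
# Create a module folder somewhere arbitrary to show that $PSScriptRoot inside a .psm1
# resolves to the module's own folder, wherever the module is installed.
$moduleRoot = Join-Path ([System.IO.Path]::GetTempPath()) ([guid]::NewGuid().ToString())
$moduleDir = Join-Path $moduleRoot 'HypotheticalModule'
New-Item -ItemType Directory -Path $moduleDir | Out-Null

Set-Content -Path (Join-Path $moduleDir 'HypotheticalModule.psm1') -Value @'
function Get-ModuleDataPath {
    # Module-relative path: no assumption about the install location.
    Join-Path $PSScriptRoot 'data.json'
}
Export-ModuleMember -Function Get-ModuleDataPath
'@

Import-Module -Name $moduleDir
Get-ModuleDataPath
```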

There are already some existing solutions for PowerShell module management, some meet most of the requirements above:

- Joel Bennett’s PoshCode module – Been around the longest but currently only supports single-file modules (ie PSM1 files).

- Andrew Nurse’s PS-Get – Almost a direct port of NuGet to PowerShell but requires PowerShell v2 to be configured to run under .NET 4.

- Mike Chaliy’s PsGet – Probably the closest to my ideal but currently it requires a GitHub pull-request to list a module and the module author must also find somewhere to host their module.

I think adding my own solution to this list would only add to the module management problem, especially when you consider the other PowerShell module management solutions that exist but I’m not aware of. Instead it would make more sense to have these existing solutions converge by having them each support the installation of modules published by the others and move toward meeting as many of the requirements above as feasible.

Create a PowerShell v3 ISE Add-on Tool

Note: This process is based on PowerShell v3 CTP 2 and is subject to change.

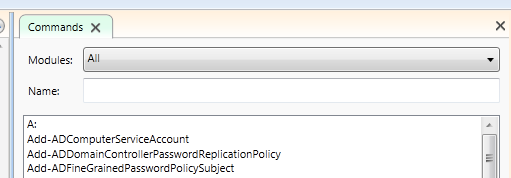

When you open PowerShell v3’s ISE (Integrated Scripting Environment) you should see a new Commands pane that wasn’t present in version 2.

This is a built-in example of an ISE Add-on Tool but you can also create your own quite easily. At its simplest an ISE Add-on Tool is a WPF Control that implements the IAddOnToolHostObject interface. To get started writing your own add-on follow these simple steps:

1. Open Visual Studio and create a new WPF User Control Library project. The new project should contain a new UserControl1.

2. Add a new reference to the Microsoft.PowerShell.GPowerShell (version 3.0) assembly located in the GAC.

3. Open the UserControl1.xaml.cs code-behind file and change the UserControl1 class to implement the IAddOnToolHostObject interface.

4. The only member of this interface is the HostObject property, which can be declared as a simple auto-property for now.

5. Build the project and find the path of the DLL it produces.

6. Open the PowerShell v3 ISE and load the DLL you have just built, eg:

Add-Type -Path 'c:\users\me\documents\project1\bin\debug\project1.dll'

7. Add the UserControl to the current PowerShell tab’s VerticalAddOnTools collection and make it visible (replace “Project1.UserControl1” below with the namespace-qualified name of your control):

$psISE.CurrentPowerShellTab.VerticalAddOnTools.Add('MyAddOnTool', [Project1.UserControl1], $true)

After following these steps you should see a new, blank pane as an extra tab where the Commands pane normally appears and now you can return to Visual Studio and start adding to the appearance and behaviour of your new add-on tool. There are some other things worth knowing about developing ISE Add-On Tools:

- When your UserControl is added to the ISE, the HostObject property is set to an object almost identical to the $psISE variable and your control uses this to interact with the ISE itself. For example you can manipulate text in the editor, or you can register for events that tell your control when a new file is opened.

- While your UserControl is loaded in the ISE, you cannot build in Visual Studio because the DLL is locked, and you need to close the ISE to unlock the file. I recommend adding a small PowerShell script to your project that performs steps 6 and 7 above, and changing your project’s Debug options in Visual Studio so that the ISE is started and your script is opened when you press F5.

- In step 7 above, your control is added to the VerticalAddOnTools collection but there is also a HorizontalAddOnTools collection and the user can move your control between these two at any time via the ISE menus so make sure you design the appearance of your Add-On Tool to work in both orientations.
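The small debugging script recommended above, which performs steps 6 and 7, might look like the function below. The function name and the default paths are hypothetical and mirror the placeholder names from the steps. The function uses Assembly.LoadFrom rather than Add-Type so that it can look the control type up in that specific assembly. It also refuses to run outside the ISE, where $psISE does not exist.

```powershell
function Install-DebugAddOn {
    param(
        # Placeholder defaults matching the names used in the steps above.
        [string]$AssemblyPath = 'c:\users\me\documents\project1\bin\debug\project1.dll',
        [string]$ControlTypeName = 'Project1.UserControl1',
        [string]$DisplayName = 'MyAddOnTool'
    )
    # $psISE only exists inside the ISE, so fail early with a clear message anywhere else.
    if (-not (Get-Variable -Name psISE -ErrorAction SilentlyContinue)) {
        throw 'Install-DebugAddOn must be run inside the PowerShell ISE.'
    }
    # Step 6: load the freshly built assembly and keep a reference to it.
    $assembly = [System.Reflection.Assembly]::LoadFrom($AssemblyPath)
    # Look the control type up in that specific assembly (throws if the name is wrong).
    $controlType = $assembly.GetType($ControlTypeName, $true)
    # Step 7: add the control to the vertical add-on pane and make it visible.
    $psISE.CurrentPowerShellTab.VerticalAddOnTools.Add($DisplayName, $controlType, $true) | Out-Null
}
```

Calling Install-DebugAddOn as the last line of the script that Visual Studio opens in the ISE reloads the add-on with a single F5.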

Alternatively the Show-UI module for PowerShell includes a ConvertTo-ISEAddOn cmdlet to create the necessary WPF objects natively from PowerShell and avoid the need for a Visual Studio project.

Executing individual PowerShell 2 commands using .NET 4

One of the many great things about PowerShell is that it can utilise the .NET framework and third-party .NET libraries directly whenever PowerShell doesn’t offer a native solution. However, the PowerShell console, the Integrated Scripting Environment (ISE) and PS-Remoting in PowerShell 2.0 are all built for use with .NET 2.0 through to .NET 3.5. With the release of version 4 of the .NET Framework though, there is an increasing amount of core functionality and third-party assemblies that are not accessible from PowerShell; the new Is64BitOperatingSystem property on the System.Environment class is a simple example.

So, given that .NET 4 has excellent support for running most .NET 2 assemblies but PowerShell doesn’t officially support .NET 4 yet, how can we safely utilise new .NET 4 functionality from PowerShell without waiting for a new PowerShell release from Microsoft? A quick search of the web will reveal a few different approaches, each with its own major drawbacks:

- Change the system-wide registry setting to load all .NET 2 assemblies under .NET 4 instead for all .NET applications.

- Change the system-wide config files for the PowerShell console, ISE, and Remoting service to use .NET 4 instead for all PowerShell sessions.

- Build a small .NET 4 application to host a PowerShell Runspace in-process as .NET 4.

These options either have too wide an effect or vary too much from the standard PowerShell experience for me to adopt. What if there was a way, from inside a standard PowerShell 2 session, to execute a single command, script block, or script under .NET 4 without any upfront configuration, without requiring elevated permissions, and without affecting anything else on the system?

This solution is available in the form of the new Activation Configuration Files feature also introduced in .NET Framework 4.0. By dynamically creating a small config file in a temporary directory then setting a process-scoped environment variable we can easily start a new .NET 4 PowerShell session passing in a ScriptBlock and some arguments and receive the same deserialized objects in return as you would see when using Remoting.
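A minimal sketch of the configuration-file half of that technique is below; it is not the module’s code. It assumes the CLR looks for a file named `<exe name>.activation_config` in the directory named by the COMPLUS_ApplicationMigrationRuntimeActivationConfigPath environment variable, which is my understanding of the activation configuration feature. The function name is hypothetical.

```powershell
# Create a temporary folder holding an activation configuration file that asks the CLR
# to run powershell.exe on .NET 4 with the legacy v2 activation policy enabled.
function New-CLR4ActivationConfig {
    $configDir = Join-Path ([System.IO.Path]::GetTempPath()) ([guid]::NewGuid().ToString())
    New-Item -ItemType Directory -Path $configDir | Out-Null
    $config = @'
<?xml version="1.0" encoding="utf-8" ?>
<configuration>
  <startup useLegacyV2RuntimeActivationPolicy="true">
    <supportedRuntime version="v4.0"/>
  </startup>
</configuration>
'@
    # The file is named after the executable it applies to.
    Set-Content -Path (Join-Path $configDir 'powershell.exe.activation_config') -Value $config
    $configDir
}

# Usage (process-scoped, so only child processes started afterwards are affected):
# $env:COMPLUS_ApplicationMigrationRuntimeActivationConfigPath = New-CLR4ActivationConfig
# powershell.exe -NoProfile -Command '$PSVersionTable.CLRVersion'
# Remove-Item Env:\COMPLUS_ApplicationMigrationRuntimeActivationConfigPath
```

Because the CLR reads this setting at process start-up, the current session keeps running on .NET 2. Only the child powershell.exe picks up .NET 4.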

I’ve implemented my first version of this technique as a PowerShell script module available as a GitHub Gist. It includes an Invoke-CLR4PowerShellCommand Cmdlet which behaves like a very simple version of the built-in Invoke-Command Cmdlet. The module also includes a Test-CLR4PowerShell function demonstrating basic use of the module.

Query all file references in a Visual Studio solution with PowerShell

Today I was working on introducing Continuous Integration to a legacy code base and was discovering the hard way that the solution of about 20 projects had many conflicting references to external assemblies. Some assemblies were different versions, others the same version but in different paths, and others completely missing altogether. Needless to say this wasn’t going to build cleanly on a build server.

Rather than manually checking the path and version of every assembly referenced by every project in the solution, I wrote a PowerShell script to parse a Visual Studio 2010 solution file, identify all the projects, then parse the project files for the reference information. The resulting Get-VSSolutionReferences.ps1 script is available on GitHub.
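The two parsing steps can be sketched roughly as follows. This is not the Get-VSSolutionReferences.ps1 script itself, and the function names and output properties are hypothetical. It assumes C# or VB project files using the MSBuild 2003 XML namespace that Visual Studio 2010 writes.

```powershell
# Return the absolute path of every C# or VB project listed in a .sln file.
function Get-SolutionProjectPath {
    param([Parameter(Mandatory = $true)][string]$SolutionPath)
    $solutionFile = (Resolve-Path -LiteralPath $SolutionPath).ProviderPath
    $solutionDir = Split-Path -Parent $solutionFile
    foreach ($line in (Get-Content -LiteralPath $solutionFile)) {
        # Project("{type-guid}") = "Name", "Relative\Path.csproj", "{project-guid}"
        if ($line -match '^Project\("\{[^}]+\}"\)\s*=\s*"[^"]*",\s*"([^"]+\.(?:cs|vb)proj)"') {
            Join-Path $solutionDir $Matches[1]
        }
    }
}

# Emit one object per <Reference> element in a project file.
function Get-ProjectFileReference {
    param([Parameter(Mandatory = $true)][string]$ProjectPath)
    $projectFile = (Resolve-Path -LiteralPath $ProjectPath).ProviderPath
    $projectDir = Split-Path -Parent $projectFile
    $projectName = Split-Path -Leaf $projectFile
    $doc = New-Object System.Xml.XmlDocument
    $doc.Load($projectFile)
    $ns = New-Object System.Xml.XmlNamespaceManager($doc.NameTable)
    $ns.AddNamespace('msb', 'http://schemas.microsoft.com/developer/msbuild/2003')

    foreach ($reference in $doc.SelectNodes('//msb:Reference', $ns)) {
        # Include is either "Name" or "Name, Version=..., Culture=..., PublicKeyToken=...".
        $include = $reference.GetAttribute('Include')
        $version = $null
        if ($include -match 'Version=([^,\s]+)') { $version = $Matches[1] }

        # HintPath is relative to the project folder; GAC references usually have none.
        $hintPath = $null
        $exists = $null
        $hintNode = $reference.SelectSingleNode('msb:HintPath', $ns)
        if ($null -ne $hintNode) {
            $hintPath = Join-Path $projectDir $hintNode.InnerText
            $exists = Test-Path -LiteralPath $hintPath
        }

        New-Object PSObject -Property @{
            Project  = $projectName
            Name     = $include.Split(',')[0].Trim()
            Version  = $version
            HintPath = $hintPath
            Exists   = $exists
        }
    }
}

# Usage: Get-SolutionProjectPath .\MySolution.sln | ForEach-Object { Get-ProjectFileReference $_ }
```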

Once I had a collection of objects representing all the assembly references I could perform some interesting analysis. Here is a really basic example of how to list all the assemblies referenced by two or more projects:

$Refs = & .\Get-VSSolutionReferences.ps1 MySolution.sln

$Refs | group Name | ? { $_.Count -ge 2 }

It doesn’t take much more to find version mismatches or files that don’t exist at the specified paths, but I’ll leave that as an exercise for you, the reader.