Category: TFS

Rules to Customising a .NET Build

It doesn’t take long before any reasonable software project requires a build process that does more than the IDE’s default Build command. When developing software based on the .NET platform there are several different ways to extend the build process beyond the standard Visual Studio compilation steps.

Here is a list for choosing the best place to insert custom build steps into the process, with the simplest first and the least desirable last:

- Project pre- and post-build events: there is a GUI, you have access to a handful of project variables, you can perform the same tasks as a Windows batch script, and they still work with Visual Studio’s F5 build and run experience. Unfortunately, only a failure of the last command in a batch will fail the build, and the pre-build event happens before project references are resolved, so avoid using it to copy dependencies.

Override the TFS Team Build OutDir property

Update: with .NET 4.5 there is an easier way.

A very common complaint from users of Team Foundation Server’s build system is that it changes the folder structure of the project outputs. By default Visual Studio puts all the files in each project’s respective /bin/ or /bin/<configuration>/ folder but Team Build just uses a flat folder structure putting all the files in the drop folder root or, again, a /<configuration>/ subfolder in the drop folder, with all project outputs mixed together.

Additionally, Team Build achieves this by setting the OutDir property on the MSBuild.exe command line. Because of MSBuild’s property precedence, a value set this way cannot easily be changed from within MSBuild itself, and the popular solution is to edit the Build Process Template *.xaml file to use a different property name. But I prefer not to touch the Workflow unless absolutely necessary.

Instead, I use both the Solution Before Target and the Inline Task features of MSBuild v4 to override the default implementation of the MSBuild Task used to build the individual projects in the solution. In my alternative implementation, I prevent the OutDir property from being passed through and I pass through a property called PreferredOutDir instead which individual projects can use if desired.

The first part, replacing the OutDir property with the PreferredOutDir property at the solution level, is achieved simply by adding a new file to the directory your solution file resides in. This new file should be named following the pattern “before.<your solution name>.sln.targets”, eg for a solution file called “Foo.sln” the new file would be “before.Foo.sln.targets”. The contents of this new file should look like this. Make sure this new file gets checked in to source control.

The second part, letting each project control its output folder structure, is simply a matter of adding a line to the project’s *.csproj or *.vbproj file (depending on the language). Locate the first <PropertyGroup> element inside the project file that doesn’t have a Condition attribute specified, and then locate the corresponding </PropertyGroup> closing tag for this element. Immediately above the closing tag add a line something like this:

<OutDir Condition=" '$(PreferredOutDir)' != '' ">$(PreferredOutDir)$(MSBuildProjectName)\</OutDir>

In this example the project will output to the Team Build drop folder under a subfolder named the same as the project file (without the .csproj extension). You might choose a different pattern. Also, Web projects usually create their own output folder under a _PublishedWebSites subfolder of the Team Build drop folder. To maintain this behaviour, just set the OutDir property to equal the PreferredOutDir property exactly.
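To make the effect of the conditional element concrete, here is a minimal Python sketch of how the output folder ends up being chosen. It only mirrors the logic of the `Condition` attribute; the function name and the sample paths are hypothetical and it is not part of the build itself.

```python
def resolve_out_dir(default_out_dir, preferred_out_dir, project_name):
    """Mirror the conditional <OutDir> element for a single project.

    default_out_dir   -- what the project would use anyway (eg bin\\Debug\\)
    preferred_out_dir -- value of PreferredOutDir, empty when not supplied
    project_name      -- stands in for $(MSBuildProjectName)
    """
    # Same test as Condition=" '$(PreferredOutDir)' != '' "
    if preferred_out_dir != "":
        return preferred_out_dir + project_name + "\\"
    return default_out_dir


if __name__ == "__main__":
    # Built by Team Build (or with /p:OutDir=... locally): per-project subfolder.
    print(resolve_out_dir("bin\\Debug\\", "c:\\TestDropFolder\\", "Foo.Core"))
    # Built from Visual Studio: PreferredOutDir is empty, so nothing changes.
    print(resolve_out_dir("bin\\Debug\\", "", "Foo.Core"))
```

The key point is that a project built from Visual Studio never sees PreferredOutDir, so its usual bin folder layout is untouched.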

You can verify if your changes have worked on your local machine before checking in simply by running MSBuild from the command-line and specifying the OutDir property just like Team Build does, eg:

msbuild Foo.sln /p:OutDir=c:\TestDropFolder\

Queue another Team Build when one Team Build succeeds

Update: with Team Build 2013 you can even pass parameters to queued builds.

I have seen several Team Foundation Server environments where multiple build definitions exist in a single project and need to be executed in a particular order. Two common techniques to achieve this are:

- Queue all the builds immediately and rely upon using a single build agent to serialize the builds. This approach prevents parallelization for improved build times and continues to build subsequent builds even when one fails.

- Check build artifact(s) into source control at the end of the build and let this trigger subsequent builds configured for Continuous Integration. This approach can complicate build definition workspaces and committing build artifacts to the same repository as code is not generally recommended.

As an alternative I have developed a simple customization to TFS 2010’s default build process template (the DefaultTemplate.xaml file in the BuildProcessTemplates source control folder) that allows a build definition to specify the names of subsequent builds to queue upon success. It only requires two minor changes to the Xaml file. The first is a line inserted immediately before the closing </x:Members> element near the top of the file:

<x:Property Name="BuildChain" Type="InArgument(s:String[])" />

The second is a block inserted immediately before the final closing </Sequence> at the end of the file:

<If Condition="[BuildChain IsNot Nothing AndAlso BuildChain.Length > 0]" DisplayName="If BuildChain defined">
  <If.Then>
    <If Condition="[BuildDetail.CompilationStatus = Microsoft.TeamFoundation.Build.Client.BuildPhaseStatus.Succeeded]" DisplayName="If this build succeeded">
      <If.Then>
        <Sequence>
          <Sequence.Variables>
            <Variable x:TypeArguments="mtbc:IBuildServer" Default="[BuildDetail.BuildDefinition.BuildServer]" Name="BuildServer" />
          </Sequence.Variables>
          <ForEach x:TypeArguments="x:String" DisplayName="For Each build in BuildChain" Values="[BuildChain]">
            <ActivityAction x:TypeArguments="x:String">
              <ActivityAction.Argument>
                <DelegateInArgument x:TypeArguments="x:String" Name="buildChainItem" />
              </ActivityAction.Argument>
              <Sequence DisplayName="Queue chained build">
                <Sequence.Variables>
                  <Variable Name="ChainedBuildDefinition" x:TypeArguments="mtbc:IBuildDefinition" Default="[BuildServer.GetBuildDefinition(BuildDetail.TeamProject, buildChainItem)]"/>
                  <Variable Name="QueuedChainedBuild" x:TypeArguments="mtbc:IQueuedBuild" Default="[BuildServer.QueueBuild(ChainedBuildDefinition)]"/>
                </Sequence.Variables>
                <mtbwa:WriteBuildMessage Message="[String.Format(&quot;Queued chained build '{0}'&quot;, buildChainItem)]" Importance="[Microsoft.TeamFoundation.Build.Client.BuildMessageImportance.High]" />
              </Sequence>
            </ActivityAction>
          </ForEach>
        </Sequence>
      </If.Then>
    </If>
  </If.Then>
</If>

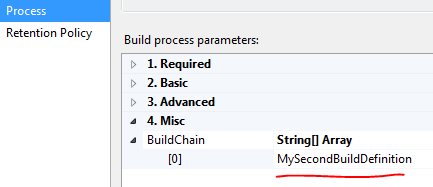

You can see the resulting DefaultTemplate.xaml file here. After applying these changes and checking in the build process template file you can specify which builds to queue upon success via the Edit Build Definition window in Visual Studio:

You can specify the names of multiple Build Definitions from the same Team Project each on a separate line. When the first build completes successfully, all builds listed in the BuildChain property will then be queued in parallel and processed by the available Build Agents. No checking is currently done for circular dependencies so be careful not to chain a build to itself directly or indirectly and create an endless loop.
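Since the template does no cycle checking, it can be worth checking a set of chained definitions by hand or with a small script before saving them. The following Python sketch shows one way to do that with a depth-first search. The `build_chains` mapping (build definition name to the names in its BuildChain parameter) is a hypothetical input you would assemble yourself; nothing here talks to TFS.

```python
def find_chain_cycle(build_chains, start):
    """Return the build names forming a cycle reachable from `start`, or None.

    build_chains -- dict mapping a build definition name to the list of
                    names in its BuildChain parameter (hypothetical input).
    """
    path = []          # builds on the chain currently being followed
    on_path = set()    # same builds, for fast membership tests
    finished = set()   # builds already proven not to lead to a cycle

    def visit(name):
        if name in on_path:
            # We came back to a build already on the current chain.
            return path[path.index(name):] + [name]
        if name in finished:
            return None
        path.append(name)
        on_path.add(name)
        for chained in build_chains.get(name, []):
            cycle = visit(chained)
            if cycle:
                return cycle
        path.pop()
        on_path.remove(name)
        finished.add(name)
        return None

    return visit(start)


if __name__ == "__main__":
    chains = {"CI": ["Package"], "Package": ["Deploy"], "Deploy": ["CI"]}
    print(find_chain_cycle(chains, "CI"))
```

A result of `None` means that following the chain from the starting build never leads back to a build already on the chain.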

Fail a build when the warning count increases

I like to set the “Treat warnings as errors” option in all my Visual Studio projects to “All” to ensure that the code stays as clean and maintainable as possible and issues that may not be noticed until runtime are instead discovered at compile time. However, on existing projects with a large number of compiler warnings already present in the code base, turning this option on will immediately fail all the CI builds and either create a lot of work for the team before the builds are passing again or the team will start ignoring the failed builds. Neither is a desirable situation.

On the other hand, unless someone is actively monitoring the warnings and notifying the team when new compile warnings are introduced, the technical debt is just going to increase. To address this I thought it would be useful to extend the default build process template in TFS 2010 to compare the current build’s warning count with the warning count from the previous build and fail the build if the number of warnings has increased. The Xaml required for this can be found here. Hopefully this strategy will result in the team slowly decreasing the warning count down to zero and then the “Treat warnings as errors” option can be enabled to prevent new compiler warnings being introduced to the code base.

At the moment this is a very naive implementation – if an increase in warnings fails one build, subsequent builds will pass unless the warning count increases again. I have two ideas for improving this:

- Compare the current warning count against the minimum warning count of all previous builds.

- Fail if the minimum warning count has not decreased within a specified time period (eg two weeks).

If I implement either of these two ideas, I’ll update this post, but they should both be quite easy to do yourself.
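The difference between the current behaviour and the first idea is easiest to see side by side. This is a minimal Python sketch of the two comparisons only, assuming the warning counts of previous builds are already available as a list (oldest first); the function names are hypothetical and the real template does this work in Xaml.

```python
def fails_naive(previous_counts, current):
    """Current behaviour: fail only if warnings rose since the last build."""
    return bool(previous_counts) and current > previous_counts[-1]


def fails_against_minimum(previous_counts, current):
    """First idea: fail if warnings exceed the lowest count ever recorded."""
    return bool(previous_counts) and current > min(previous_counts)


if __name__ == "__main__":
    history = [10, 12]   # the build with 12 warnings already failed
    current = 12         # nobody fixed anything, count is unchanged
    print(fails_naive(history, current))            # passes the naive check
    print(fails_against_minimum(history, current))  # still fails against the minimum
```

With the minimum-based check the build keeps failing until the count is back at or below the best value seen so far, which closes the loophole described above.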

Fail a Lab build when the environment is in use

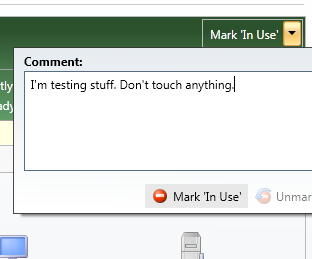

Microsoft Test Manager in Team Foundation Server 2010 enables users to mark a lab environment as “in use” with their name, time stamp, and a comment.

Other people can then see this and contact the user first if they’d also like to test, redeploy or otherwise interrupt a lab environment.

However, if a user manually queues a Lab build from Visual Studio Team Explorer, or a Lab build is triggered by a schedule or a check-in, this marker is not visible and the Lab build will execute, redeploying the environment and most likely upsetting the user who was already using the environment.

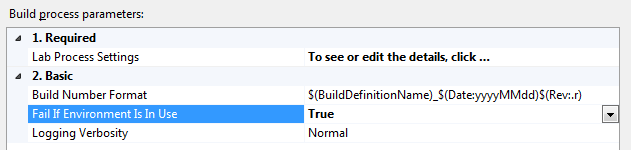

As a solution to this, I have modified the default lab build process template to include a new parameter to specify whether the build should fail if the environment has been marked as “in use”.

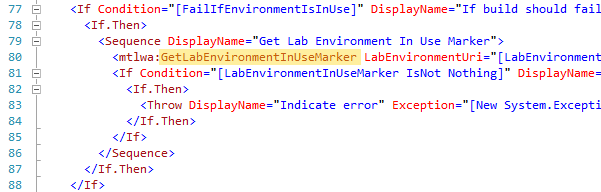

Thankfully, most of the hard work has already been done in the form of the out-of-the-box Workflow activities for Lab Management, and I just needed to add a small chunk of XAML to the existing LabDefaultTemplate.xaml file in my Team Project’s “BuildProcessTemplates” source control folder. The full customised template is here, but the core of the change was this simple code:

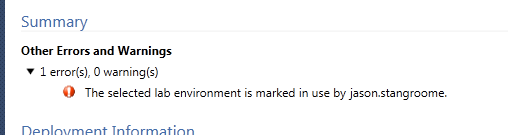

And now the lab build will fail when the environment is marked “in use”…

Automatic TFS Check Out for PowerShell ISE

I work with a lot of PowerShell scripts; it’s the fun part of my job. I do most of my editing using the PowerShell ISE, primarily because it is the default, but also because it has great syntax highlighting, tab completion, and debug support. Additionally, all of my scripts are in source control: sometimes Mercurial, occasionally Git, but mostly Team Foundation Server (TFS).

The way TFS works though, is that all files in your local workspace are marked as read-only until you Check-Out for editing. When working on TFS-managed files in Visual Studio, the check-out is done automatically as soon as you begin typing in the editor. The PowerShell ISE however has no idea about TFS and will let you edit to your heart’s content until you eventually try to save your changes and it fails due to the read-only flag.

But I’ve developed a solution…

I’ve written a PowerShell ISE-specific profile script that performs a few simple things:

- Checks if you have the TFS client installed (eg Team Explorer).

- Registers for ISE events on each open file and any files you open later.

- Checks out a file as soon as you edit it, if the file is TFS-managed.

The end result is the same TFS workflow experience from within the PowerShell ISE as Visual Studio provides.

Export all report definitions for a Team Project Collection

Team Foundation Server 2010 introduced Team Project Collections for organising Team Projects into groups. Collections also provide a self-contained unit for moving Team Projects between servers and this is well documented and supported.

However, if you’ve ever tried moving a Team Project Collection you’ll find the documentation is a long list of manual steps, and one of the more tedious steps is Saving Reports. This step basically tells you to use the Report Manager web interface to manually save every report for every Team Project in the collection as an .RDL file. A single project based on the MSF for Agile template will contain 15 reports across 5 folders, so you can easily spend a while clicking away in your browser.

To alleviate the pain, I’ve written a PowerShell script which accepts two parameters. The first is the url for the Team Project Collection, and the second is the destination path to save the .RDL files to. The script will query the Team Project Collection for its Report Server url and list of Team Projects via the TFS API, then it will use the Report Server web services to download the report definitions to the destination, maintaining the folder hierarchy and time stamps. You can access this script, called Export-TfsCollectionReports, on Gist.

Obviously, when you reach the step to import the report definitions on the new server, you’ll want a similar script to help. Unfortunately, I haven’t written that one yet but I will post it to my blog when I do. In the meantime you could follow the same concepts used in the export script to write one yourself.

Providing seamless external access to Team Build drops

There are a few articles describing how to configure your Team Foundation Server to be accessible externally. These articles focus on exposing the TFS web services, the SharePoint team project portals, Reporting Services, Team System Web Access and, in TFS 2010, even Lab Management, via public HTTP(S) urls.

The primary TFS resource omitted from such articles is how to expose the build outputs externally too, so that clicking the Open Drop Folder and View Log File links in the Visual Studio Build Explorer just work wherever you are.

My solution is based on the feature of modern versions of Windows (ie XP and later) to automatically try WebDAV when the standard file share protocol (SMB/CIFS) fails to connect to a given UNC path. Simply, you configure a WebDAV URI (using the IIS 7.5 WebDAV module in my case) that corresponds directly to the file share UNC used for build drops. That is, if your build drop path is \\myserver\myshare\ then the WebDAV URI must be http://myserver/myshare/.

While build drops can be hosted on any file server, I usually see the Team Foundation server itself, or even the build agents, used as the location for the drop share. Having the WebDAV server and the drop file server as the same machine simplifies the setup (for the reasons below), but installing WebDAV on your build agent just complicates a build environment that should be kept as clean as possible, so I’d recommend against using the agent for the drop location. In my case I opted for a dedicated build drop file server, but you can just as easily use the TFS server if your infrastructure resources are limited.

When configuring the WebDAV module for HTTP we use Windows Authentication for granting access and this works great for resources on the WebDAV server. However, from my experience*, if the WebDAV site itself, or a virtual subdirectory, is mapped to a remote file share, impersonation of the credentials provided by Windows Authentication fails. If you switch to Basic Authentication though, access to resources not local to the WebDAV server then works, but Basic Authentication has its own problems.

With Basic Authentication, the user’s password is sent over the wire, so WebDAV refuses to allow it by default unless you also use SSL. Sadly, the Windows WebDAV redirector won’t automatically try HTTPS for UNC paths unless the server name is suffixed with “@ssl” (eg \\myserver@ssl\myshare\) and this unfortunately forces WebDAV to be used even when internal systems do have direct access to the file share.
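The correspondence between a drop folder UNC path and the WebDAV URL that must exist for it can be written down as a small function. This Python sketch covers only the two forms described above (plain UNC to HTTP, and the “@ssl” suffix to HTTPS). The function name is hypothetical and it does no URL escaping, so treat it as an illustration of the mapping rather than a full description of what the Windows redirector does.

```python
def unc_to_webdav_url(unc_path):
    """Map \\\\server\\share\\... to the WebDAV URL that must serve it."""
    if not unc_path.startswith("\\\\"):
        raise ValueError("not a UNC path: " + unc_path)
    parts = [p for p in unc_path.split("\\") if p]
    if not parts:
        raise ValueError("UNC path has no server name: " + unc_path)

    host, segments = parts[0], parts[1:]
    scheme = "http"
    # A server name suffixed with "@ssl" asks for HTTPS instead of HTTP.
    if host.lower().endswith("@ssl"):
        host = host[: -len("@ssl")]
        scheme = "https"

    path = "/".join(segments)
    # Keep a trailing separator so folders stay folders.
    if segments and unc_path.endswith("\\"):
        path += "/"
    return scheme + "://" + host + "/" + path


if __name__ == "__main__":
    print(unc_to_webdav_url("\\\\myserver\\myshare\\"))
    print(unc_to_webdav_url("\\\\myserver@ssl\\myshare\\"))
```

If the URL produced for your drop path does not resolve to the same files as the share, the fallback from SMB to WebDAV will not be seamless.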

Another small catch with using WebDAV to expose drop folders is that they often contain “web.config” files, and IIS naturally tries to parse these files, typically breaking the ability to browse to those folders. The solution I found from Steve Schofield was to set the “enableConfigurationOverride” property to “false” for the Application Pool used by the WebDAV IIS Site. This property isn’t available via the Management Console so you’ll have to use “appcmd” or edit the “applicationHost.config” file directly.

Update: With Windows 7 or Vista, a registry change is also required to add the WebDAV server’s FQDN to a list of servers to forward credentials to. It’s all documented in the Microsoft KB article 943280.

Finally, to complete the seamless experience, the drop file server should be referenced by the same fully-qualified domain name (FQDN) both internally and externally (eg \\myserver.mydomain.com\myshare\). The first step for this is to ensure that the drop file server itself will authenticate via the FQDN locally, especially if it’s the TFS server. This is done by adding the FQDN to the server’s BackConnectionHostNames registry key. Secondly, ensure that the FQDN resolves correctly for both internal and external users. In my case I configured Split DNS to resolve the FQDN to an internal IP address for internal users and a public IP for the public, keeping the routes as short as possible.

You can now change your build definitions to use the FQDN UNC as the default drop location and all future build outputs should be reachable via the same path wherever you are.

*It may be possible to get Windows Authentication impersonation to work for resources not hosted on the WebDAV server by granting the correct delegation permissions but I would need to do more research.

Team Build 2010 process file management

When you create a new Team Foundation Server 2010 Team Project from the standard MSF process templates and choose the defaults, you will be given a BuildProcessTemplates folder in your new project’s source control root. In this folder you should discover three Xaml files, corresponding to each of the out-of-the-box build types: default, 2008-compatible build, and Lab build.

If you then create a new Build Definition for your team, the Process page will default to the “Default Template”. If you expand for more details, you’ll see a drop-down of the three choices or a New button. It’s not until you click the New button that it’s obvious you can still put your build files anywhere in source control, just like TFS 2008. Upon creating a new process file you should also discover the drop-down list will include your new file, even if you don’t store it in the BuildProcessTemplates folder.

Until your build definitions exceed the limitations of the out-of-the-box process files, it is often fine to use them where they are and just adjust the Parameters property grid. As soon as you want to customise the build process itself, you should move to a more manageable arrangement for these files. My preferred structure is basically to create a new Builds folder under your Trunk folder (or “Main” or whatever you call your branching root) and then branch the base template file (eg DefaultTemplate.xaml) into this new Builds folder with a more descriptive file name (eg MyNightlyBuild.xaml) and customise it there.

Unfortunately, while the Build Definition window has a Copy button to create a new process file to work on in a single step, copying doesn’t maintain a relationship between your new file and the original. This is important because now in 2010, the build files contain the full build process (not just the overrides like TfsBuild.proj files did), so they much more closely resemble the Microsoft.TeamFoundation.Build.targets file from TFS 2008 and are subject to servicing. By branching, when a service pack introduces new versions of the templates, you will be able to merge the updates through to your customised files. And just like the 2008 targets files, you should avoid reverse-integrating your customisations back to the originals.

In addition to leaving the original files unchanged, there are other reasons why you should put your process files in a Builds folder below your trunk:

- When you branch source, you should branch build process too. Keeping them below your branching root makes this automatic.

- If you want to reference custom build activities, you can no longer just double-click the Xaml file in Source Control to edit it. Instead you need to put your Xaml file in a project that has project references to the activity assemblies. The build folder gives you a perfect place to put the project file and optionally add it to your solution.

- Finally, customised builds will often reference other external files (eg PowerShell scripts) and the build folder is a good place for them too.

If you’ve found any other good practices with managing TFS 2010 build process files, feel free to leave a comment.

Code Coverage Delta with Team System and PowerShell

My last post documented my first venture into working with Visual Studio’s Code Analysis output with PowerShell to find classes that need more testing. Since then I’ve taken the idea further to analyse how coverage has changed over a series of builds from TFS.

What resulted is a PowerShell script that takes, as a minimum, the name of a TFS Project and the name of a Build Definition within that project. The script will then, by default, locate the two most recent successful builds, grab the code coverage data from the test runs of each of those builds and output a list of classes whose coverage has changed between those builds, citing the change in the number of blocks not covered.

Additional parameters to the script allow partially successful or failed builds to be considered, and also allow coverage change to be analysed over a span of several builds rather than just two consecutive builds.

The primary motivator behind developing this script was to be able to identify more accurately where coverage was lost when a new build has an overall coverage percentage lower than the last. This then helps to locate, among other things, where new code has been added without testing or where existing tests have been deleted or disabled.

A code base with a strong commitment to the Single Responsibility Principle should find class-level granularity sufficient but extending the script to support method-level reporting should be trivial given that the Coverage Analysis DataSet already includes all the required information.
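The core comparison is simple once the per-class figures have been extracted from each build. As a rough illustration, here is a Python sketch that takes two hypothetical dictionaries of class name to blocks not covered (one per build) and reports the classes whose figure changed. It assumes a class missing from one build has zero uncovered blocks there, which is a simplification of my own rather than how the PowerShell script necessarily behaves.

```python
def coverage_delta(older, newer):
    """List (class name, change in blocks not covered) for changed classes.

    older, newer -- dicts mapping class name to blocks not covered in the
                    older and newer build (hypothetical, pre-extracted data).
    """
    rows = []
    for class_name in sorted(set(older) | set(newer)):
        # Assumption: a class absent from a build counts as 0 uncovered blocks.
        delta = newer.get(class_name, 0) - older.get(class_name, 0)
        if delta != 0:
            rows.append((class_name, delta))
    # Smallest delta first, like piping the script output to sort.
    return sorted(rows, key=lambda row: row[1])


if __name__ == "__main__":
    older = {"Foo.Parser": 4, "Foo.Lexer": 10, "Foo.Cli": 3}
    newer = {"Foo.Parser": 9, "Foo.Lexer": 6, "Foo.Cli": 3, "Foo.New": 7}
    for class_name, delta in coverage_delta(older, newer):
        print(class_name, delta)
```

Positive deltas are the interesting ones: they point to classes where more blocks are uncovered than before, either through new untested code or removed tests.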

The script requires the Team Foundation Server PowerShell Snapin from the TFS October 2008 Power Tools and the Visual Studio Coverage Analysis assemblies presumably available in Visual Studio 2008 Professional or higher. These dependencies only support 32-bit PowerShell so my script unfortunately suffers the same constraint.

Download the script here, and use it something like this:

PS C:\TfsWorkspace\> C:\Scripts\Compare-TfsBuildCoverage.ps1 -project Foo -build FooBuild | sort DeltaBlocksNotCovered